July 8, 2025

Not all scams are easy to spot. Some are crafted to resemble familiar voices, trusted faces, and legitimate interactions. That’s what makes deepfakes especially dangerous.

Once seen as entertainment or technical novelties, deepfakes have taken a darker turn. Scammers now use them to impersonate CEOs, relatives, and customer service reps. These are no longer crude, low-quality videos: the fakes are convincing, and the results are costly. According to a 2023 report by Gartner, deepfake-based fraud is expected to cost over $250 million annually by 2026, as scammers continue to find new ways to bypass identity checks.

This article explains how deepfake scams work, where they’re showing up in finance, and what you can do to protect yourself.

What Are Deepfakes?

Deepfakes are fake videos, audio clips, or images that look and sound real. They’re made with AI software that learns to copy the way someone talks, moves, or looks. The result can be so realistic that it’s hard to tell the person on screen isn’t actually them.

At first, deepfakes were mostly internet fun: a celebrity’s face swapped into a movie scene, or a cloned voice in a parody clip. But they didn’t stay there for long. As the technology improved, so did the ways to abuse it.

Now, deepfakes show up in places that matter: a video call from your manager, a voicemail from a relative, or a message from someone you trust. Except it isn’t them. It just looks and sounds like them, and that’s the problem.

How Scammers Are Using Deepfakes in Finance

Finance has become a major target. With deepfakes, scammers don’t need to hack systems; they just need to trick people.

One of the most reported tactics involves impersonating executives. In some cases, scammers send video messages or join live calls pretending to be a CFO or CEO. They instruct employees to transfer funds or share sensitive details. And because the person looks real, no one questions it until it’s too late.

In banking and crypto, some victims have received video calls from people they believe are account managers. These fake reps offer help with “investment upgrades” or withdrawals. The caller sounds knowledgeable, friendly, and trustworthy. But it’s a setup. Once the money moves, the platform disappears.

Scammers have even used deepfake audio to get past voice verification systems. They call customer support with a generated voice that matches the real person’s tone and accent, and in some cases that’s enough to reset passwords or access accounts.

Why People Fall for Deepfake Scams

These scams don’t just rely on visuals; they exploit trust. People are wired to respond to familiar faces and voices. When someone looks and sounds like a known person, it creates a sense of safety. Scammers take advantage of that.

Deepfake tech also plays into urgency. The fake CEO might say, “This needs to happen in the next 10 minutes,” creating pressure. Victims don’t stop to think. They act fast, trying to help or follow through.

In some cases, the scam is emotional. A person receives a video from a loved one asking for money. The background looks normal, the voice is right, and the message seems urgent. But it’s fake, often stitched together from old videos and public posts.

It’s not about falling for something obvious. It’s about being manipulated by something designed to feel real.

Real-Life Deepfake Scam Incidents

The danger of deepfakes isn’t just something to worry about in the future; it’s happening now. People and businesses have already lost money because of them. More and more real-world cases are showing just how far scammers will go, and how believable these fake videos and audio clips can be.

The Hong Kong Deepfake Video Call Heist

In early 2024, a Hong Kong-based employee at a multinational firm received a video call from someone who looked and sounded exactly like the company’s CFO. The “CFO” instructed the employee to transfer over $25 million to several international accounts for an urgent acquisition deal. Believing the request to be legitimate, the employee followed through.

Days later, it was discovered that the entire interaction had been staged using deepfake video and voice cloning. The scam was so advanced that multiple other executives had also been impersonated in pre-recorded clips to build credibility.

Crypto Trader Duped by Deepfake Account Manager

A London-based crypto investor reported being scammed after receiving a call from someone claiming to be his account manager at a major trading platform. The caller had a video feed, used the manager’s full name, and referenced recent trading activity.

Everything seemed in place until the platform suddenly went offline after he authorized a $300,000 transfer to a “new wallet” for a supposed upgrade. Authorities later found that the scammer had pulled public content from social media and interviews to build a custom deepfake persona.

Family Targeted with Emotional Scam Video

Recently, in Arizona, a mother received a video that appeared to show her teenage daughter in trouble. The girl was crying in a room the mother didn’t recognize, asking for money to make bail. It sounded just like her: the same voice, the same way of speaking, even phrases only she would use.

Believing it was real, the mother transferred money to the account provided. Only after calling the school did she discover her daughter had been safe the entire time. Authorities believe the video was made using clips taken from the daughter’s public social media profiles.

Cases like this show how convincing fake videos and voices are being used to carry out emotional scams that target families directly.

5 Red Flags to Watch Out For

Scam videos and fake voice messages can seem incredibly real, but they often leave behind a few strange signs: things that feel just a bit off if you’re paying attention. These small slips might not stand out at first, but they can make all the difference.

1. Something Doesn’t Match the Usual Behavior

Let’s say someone sends a video or voice clip, asking for money, login info, or something sensitive. Even if it sounds like someone you know, don’t jump in right away. Slow down. Contact them through whatever method you’ve always used, like their regular email or phone.

Fake conversations fall apart when you go off script or bring up something unexpected. That’s when things start to not add up.

2. The Timing Feels a Bit Off

Sometimes, the mouth doesn’t match the words. Or the person talks in a way that feels too perfect, with no pauses, no filler words, just smooth, fast speech. Real people don’t sound like that. There’s usually a rhythm, small stumbles, or a breath. If it feels too clean or the timing seems strange, that’s worth checking out.

3. You're Being Rushed or Told to Keep It Quiet

Phrases like “do this now” or “don’t tell anyone” are classic pressure tactics. That rush is intentional; it’s meant to stop you from thinking clearly.

A real request, no matter how urgent, usually allows time for questions or at least a short delay. If someone’s trying to isolate you and hurry you into action, be careful.

4. They Want to Move the Chat Somewhere Else

If someone who usually emails you suddenly wants to switch to Telegram, Zoom, or Signal, and gives a vague excuse like “my email’s down”, pay attention. That switch could be about hiding their tracks.

Scammers don’t want to be recorded or traced. If they won’t stick to your usual communication method, that’s a solid reason to pause.

5. The Details Feel a Little Off

Even a good fake can mess up the small stuff. Maybe they mention a team that doesn’t exist anymore. Maybe they use a title that doesn’t fit. Sometimes the way they talk is just... not quite right.

It might be tone. It might be a phrase they wouldn’t normally use. If you get a weird feeling, don’t ignore it. Follow up and verify before doing anything else.
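For teams that handle payments, the five red flags above can be turned into a simple pre-action checklist. The sketch below is a hypothetical Python example, not a deepfake detector: a person answers each question, and the code only records which flags were raised and whether the request should be held until it is confirmed through a contact method you already use. The `Request` class, its field names, and the `red_flags`/`should_hold` helpers are illustrative assumptions, not part of any real tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Request:
    """One incoming request, described by answers a person fills in (hypothetical fields)."""
    mismatches_usual_behavior: bool  # flag 1: unusual ask for money, logins, or data
    timing_feels_off: bool           # flag 2: lip-sync drift, unnaturally smooth speech
    rushed_or_secret: bool           # flag 3: "do this now", "don't tell anyone"
    moved_to_new_channel: bool       # flag 4: email contact suddenly wants another app
    details_feel_off: bool           # flag 5: wrong team, wrong title, odd phrasing
    verified_out_of_band: bool       # confirmed via a contact method you already use


# Human-readable label for each red-flag field, in checklist order.
FLAG_LABELS = {
    "mismatches_usual_behavior": "request doesn't match their usual behavior",
    "timing_feels_off": "audio/video timing feels off",
    "rushed_or_secret": "pressure to rush or keep it quiet",
    "moved_to_new_channel": "conversation moved to a new channel",
    "details_feel_off": "small details don't add up",
}


def red_flags(req: Request) -> list[str]:
    """Return the labels of every red flag answered 'yes'."""
    return [label for field, label in FLAG_LABELS.items() if getattr(req, field)]


def should_hold(req: Request) -> bool:
    """Hold any flagged request until it has been confirmed out of band."""
    return bool(red_flags(req)) and not req.verified_out_of_band


if __name__ == "__main__":
    call = Request(True, False, True, False, False, verified_out_of_band=False)
    for reason in red_flags(call):
        print("-", reason)
    print("hold:", should_hold(call))
```

The design choice is deliberate: no single flag proves a fake, so the only way to clear a flagged request is the step the article keeps returning to, which is confirming it through a channel you already trust.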

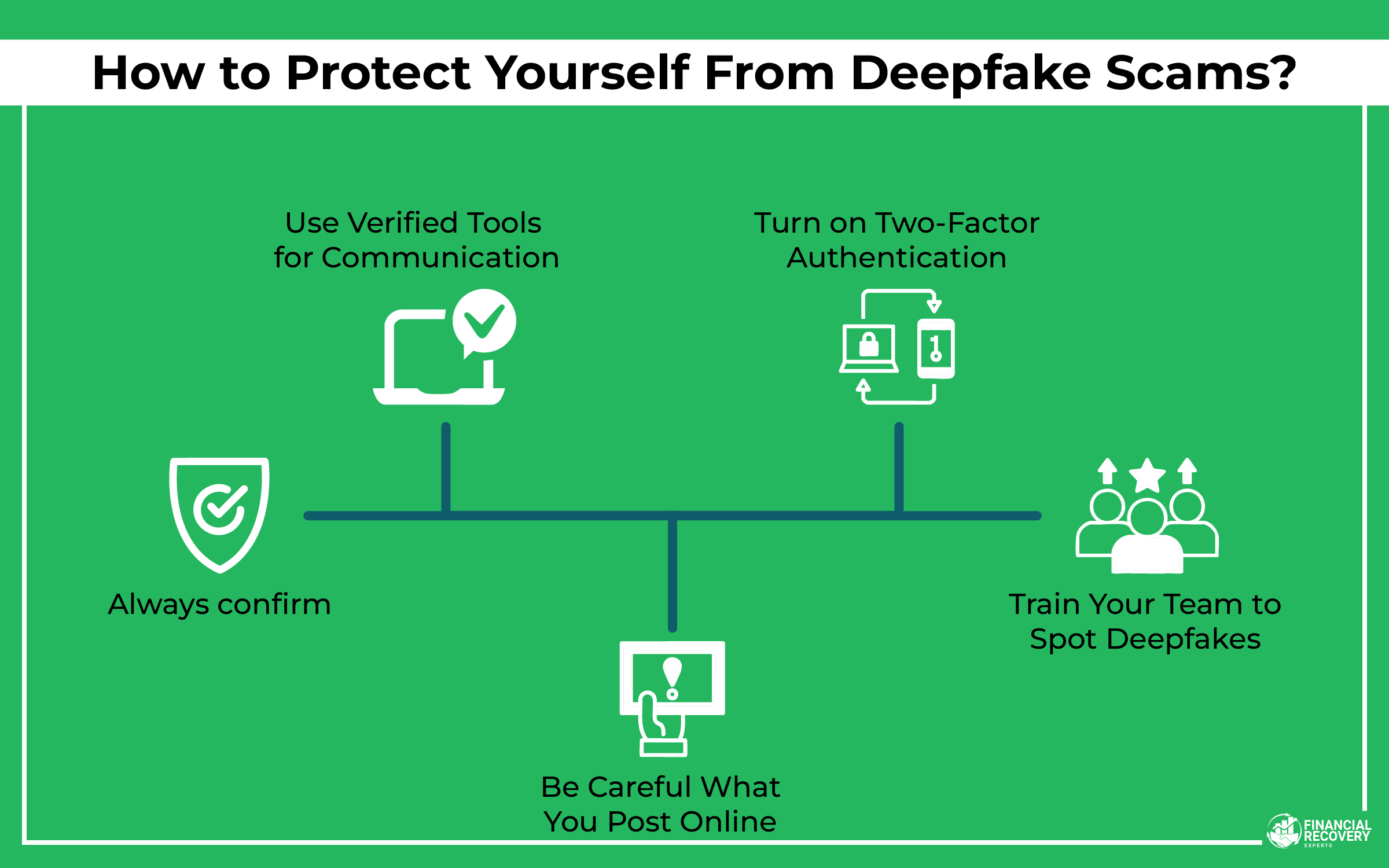

5 Ways to Protect Yourself

You don’t need to ditch tech to stay safe. Just slow things down and pay attention to what’s really happening. Scammers count on people reacting fast, so don’t give them that chance.

1. Take a Moment Before Responding

Let’s say you get a video or a voice note. Sounds urgent, maybe someone needs money, a password, or a quick file. Could be a friend, your boss, whoever. Looks and sounds real. But before doing anything, stop. Call them. Text on another app. Use whatever you normally use to reach them. A scam only works if you trust it without checking.

2. Stick to What You Know

For anything sensitive, such as calls, files, or logins, use tools you recognize. Zoom, Slack, Teams. These platforms aren’t perfect, but they’re better than sketchy links or apps you’ve never heard of. If someone’s rushing you to join through some weird site or download something unfamiliar, that’s a red flag right there.

3. Think Twice About What You Share

Posting a funny clip or a quick voice message seems harmless until someone else grabs it and uses it to sound like you. Happens more than people think. Even old content can be scraped from your profile. If you don’t want a stranger using it, maybe don’t leave it out in the open.

4. Add an Extra Lock to Your Accounts

Passwords can be guessed, phished, or stolen. That’s just the reality now. Two-factor authentication (2FA) gives you a backup: even if someone has your login, they’re stuck without that second code. And yeah, use an authenticator app or a security key, not text messages; SMS codes can be intercepted or redirected through SIM-swap attacks. It’s a small step that makes a huge difference.
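For the curious, here is roughly what an authenticator app does behind that second code. Such apps typically implement time-based one-time passwords (TOTP, RFC 6238): the app and the service share a secret, and each derives a short code from that secret plus the current 30-second time step, so a stolen password alone isn’t enough. The minimal Python sketch below uses only the standard library; the function names are hypothetical, real apps usually store the secret in Base32 (you would decode it with `base64.b32decode` first), and production systems should rely on a vetted library rather than hand-rolled code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    # The counter is hashed as an 8-byte big-endian integer.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte choose an offset,
    # and the 31 bits starting there become the numeric code.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP where the counter is the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept a code from the current step or up to `window` steps either side."""
    now = time.time() if at is None else at
    current = int(now // step)
    for counter in range(max(0, current - window), current + window + 1):
        # compare_digest avoids leaking how many characters matched via timing.
        if hmac.compare_digest(hotp(secret, counter, digits), submitted):
            return True
    return False
```

With the RFC 6238 test secret `b"12345678901234567890"`, `totp(secret, at=59)` returns `"287082"`. The small `window` in `verify_totp` tolerates a little clock drift between phone and server; because the code changes every step, each one is only useful for a short time.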

5. Talk to Your Team About It

In a company setting, especially if you’re dealing with money, clients, or private info, people need to know what to look for. That means real training, not just a PDF nobody reads. Show them what a fake might look like. Encourage questions. Nobody should feel bad for being cautious. That’s how you stop the slip-ups.

What to Do If You’ve Been Scammed

Scammers rely on silence. Acting quickly can make a difference.

- Cut All Contact Immediately: End the call, block the sender, and don’t reply. Any continued engagement gives scammers more ground.

- Report the Scam: Notify your company, bank, or platform right away. Also, file a report with your local cybercrime unit. In the U.S., report to the Federal Trade Commission (FTC) or the FBI’s Internet Crime Complaint Center (IC3). In the U.K., use Action Fraud.

- Secure Your Accounts: Change passwords, activate 2FA, and review any recent activity. If financial details were shared, alert your bank immediately.

- Preserve Evidence: Save videos, messages, screenshots, and anything that shows the interaction. It helps investigators and may support recovery attempts.

- Consider Recovery Services: In some cases, especially involving large sums or crypto, fraud recovery firms like Financial Recovery Experts can help trace digital transactions across blockchains and work with relevant platforms to flag or freeze stolen assets quickly. Their specialized tools and rapid-response process make them a practical option when time is critical.

How Scammers Are Turning Deepfakes into a Business

For some scammers, deepfakes aren’t a one-off trick; they’re a structured operation. There are now scam farms where people are trained to run deepfake campaigns. Some use AI tools to generate thousands of faces and voices; others rent deepfake software to carry out custom attacks.

Stolen social media content is turned into scripts and source material, which scammers feed into voice-cloning and face-mapping tools. The result is a fake version of someone convincing enough to fool even close contacts. This isn’t just tech misuse; it’s organized deception, built to scale quickly.

FAQs (Frequently Asked Questions)

Can deepfakes be used in live video calls?

Yes, they can, and that’s one of the scariest parts. Some tools let someone appear on a call in real time, looking and sounding like another person. It’s not just a recorded video either; the face and voice react as the scammer speaks. If you’re not paying close attention, it’s easy to miss the small signs that something’s off.

Are deepfakes easy to spot?

Not really. Some still have telltale glitches, like awkward blinking, strange lighting, or lips not quite matching the words. But when the scam is quick and the resolution is low, people miss those things. And the best ones? They’re pretty hard to catch without slowing things down or looking really closely.

Where do scammers get the voice samples they clone?

Most of the time, they don’t even need to dig that deep. Voice clips are all over the internet: interviews, podcasts, online videos, or even a voicemail greeting. A few minutes of clean audio is usually enough to build a clone. It’s a good reminder to be careful about what’s shared publicly.

Which industries are most at risk?

Finance is a big one, especially banks and crypto platforms. But law firms, remote work environments, and even customer service teams are getting hit too: anywhere trust matters and decisions happen quickly. If someone’s identity can be faked, it opens the door.

Can software detect deepfakes?

Some tools are built for that, yes. They look for odd movements, lighting mismatches, and other signs that a video has been altered. But they aren’t foolproof. A well-made deepfake can still get past those checks, especially in real-time calls. That’s why gut instinct and verifying requests the old-fashioned way still matter.